Globally Constrained Structured Learning


However, pairwise MRFs consider only the local dependencies between variables and cannot account for global statistics that depend on the whole set of variables (or a large subset of them). Yet knowledge about global statistics of the hidden variables is often available in real-world problems: in text denoising, the letter frequencies of natural language are known; in multi-label classification, the minimal and maximal number of chosen labels can be specified by the problem; in image segmentation, the data may already be weakly labeled, or there may be additional knowledge about an object's shape, structure, etc. Formally, global constraints involve the whole set of hidden variables, so the graph of the MRF needs to contain a full clique over the hidden variables, which in general makes exact inference intractable. Recently, however, several approaches have appeared that make it possible to take some kinds of global information into account.
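As a small illustration of the multi-label example above, the following sketch performs inference under a cardinality constraint on the number of chosen labels. It is a deliberately simplified setting, not the method of this project: it assumes a model with unary scores only (no pairwise terms), and the function name `constrained_labels` and its parameters `k_min` and `k_max` are hypothetical. Under those assumptions the best labeling is found by sorting the scores.

```python
import numpy as np


def constrained_labels(scores, k_min, k_max):
    """Choose binary labels maximizing the sum of selected scores,
    subject to k_min <= (number of chosen labels) <= k_max.

    Assumption: the model has unary scores only, so the optimum is
    the top-k labels for a suitable k.
    """
    scores = np.asarray(scores, dtype=float)
    n = scores.size
    if not 0 <= k_min <= k_max <= n:
        raise ValueError("need 0 <= k_min <= k_max <= number of labels")

    # Without constraints we would pick every label with a positive score.
    n_positive = int(np.sum(scores > 0))

    # The global constraint clips that count into [k_min, k_max].
    k = min(max(n_positive, k_min), k_max)

    # Select the k highest-scoring labels.
    order = np.argsort(-scores, kind="stable")
    labels = np.zeros(n, dtype=int)
    labels[order[:k]] = 1
    return labels


scores = [2.0, -1.0, 0.5, -3.0]
unconstrained = constrained_labels(scores, 0, len(scores))
at_least_three = constrained_labels(scores, 3, len(scores))
at_most_one = constrained_labels(scores, 0, 1)
```

With pairwise terms the problem no longer reduces to sorting, which is where the difficulty discussed in the text comes from.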

We generalize the structural SVM (SSVM) to a new formulation in which some global statistics are assumed to be known at prediction time. Our idea is that incorporating the global statistics of the training data into the learning process leads to better probabilistic models, and this improvement has already been reported on several real-world datasets. This motivates the search for tractable methods of performing such globally constrained learning. We examine the difficulties of the overgenerative LP-relaxation SSVM, which has been reported as one of the most accurate structural learning algorithms, and propose an effective globally constrained training algorithm that converges to the result of the overgenerative cutting-plane SSVM without using the computationally hard LP relaxation.

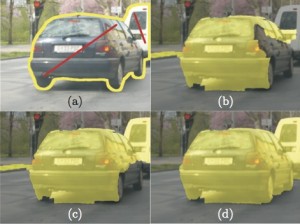

Semantic segmentation: improvement of the probabilistic model by using globally constrained structured learning; (a) ground-truth segmentation, where objects of the target class (cars) are outlined in yellow and red lines correspond to user-defined seeds; (b) segmentation without using the global constraints (seeds); (c) segmentation obtained by constrained inference with a model trained by conventional (unconstrained) SSVM; (d) segmentation obtained by constrained inference with a model trained taking the global constraints into account.
