The paper “On Power Laws in Deep Ensembles” accepted as a spotlight at NeurIPS'20

A talk by Laboratory researchers at one of the largest AI conferences.

The paper “On Power Laws in Deep Ensembles” by Samsung-HSE Laboratory researchers Ekaterina Lobacheva, Nadezhda Chirkova, Maxim Kodryan and Dmitry Vetrov has been accepted for a spotlight presentation at the Neural Information Processing Systems (NeurIPS) 2020 conference. NeurIPS is one of the world's leading scientific conferences on artificial intelligence and annually brings together top experts in the field in one place. This year, due to the pandemic, the conference will be held online. A total of 9454 papers were submitted to NeurIPS'20, of which 1900 were accepted for publication, including 280 spotlight and 105 oral presentations. Because of the large number of papers, most works are presented as posters, so that each participant can choose their own subset of papers of interest, while the spotlight and oral talks are selected from the papers that received the highest ratings from reviewers.

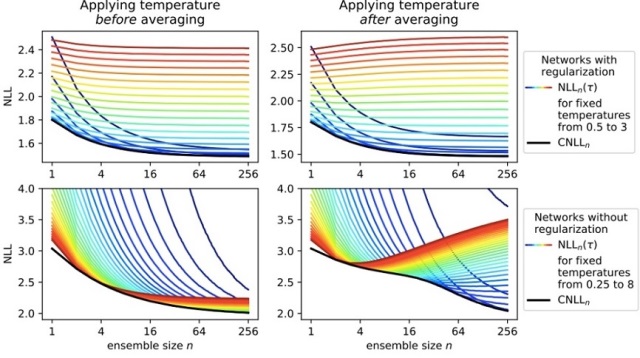

The paper by the FCS researchers studies the laws that govern how the quality of neural network ensembles changes. Ensembling is a simple procedure: several neural networks are trained and their predictions are averaged. Averaging reduces the number of errors the model makes and also helps it judge more accurately whether it is confident in a prediction or whether it is better to abstain and fall back on an alternative source of information. For example, a fingerprint recognition model in a smartphone might recognize the fingerprint correctly in 99% of cases and, in the remaining 1%, refuse to make a prediction and ask the user for a password. The more neural networks in the ensemble, the higher the quality, but the slower the prediction (for example, the slower the fingerprint recognition). The paper “On Power Laws in Deep Ensembles” examines how the quality of the ensemble's confidence estimates changes as the number of neural networks grows, and the discovered law helps determine the minimum number of networks needed to reach a desired level of quality. Researchers at the Samsung-HSE Laboratory plan to continue working in this direction.
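To make the procedure above concrete, here is a minimal sketch in Python with NumPy. It is not the authors' code. It averages the class probabilities of several networks, abstains when the averaged confidence falls below a threshold (the "ask for a password" case), and picks the smallest ensemble size under an assumed power law of the generic form loss(n) = c + b·n^(−a), where lower loss means better quality. The functions `ensemble_predict`, `predict_or_abstain` and `min_ensemble_size`, the threshold, the `-1` abstain label and the constants `c`, `b`, `a` are all hypothetical and only for illustration. In practice the constants would have to be fitted on held-out data.

```python
import numpy as np


def ensemble_predict(member_probs):
    """Average the class probabilities predicted by each ensemble member.

    member_probs: array of shape (n_members, n_samples, n_classes).
    """
    return np.mean(member_probs, axis=0)


def predict_or_abstain(avg_probs, threshold=0.9):
    """Return the predicted class, or -1 (abstain) if confidence < threshold.

    The threshold and the -1 sentinel are hypothetical choices.
    """
    confidence = avg_probs.max(axis=1)  # probability of the top class
    labels = avg_probs.argmax(axis=1)   # index of the top class
    return np.where(confidence >= threshold, labels, -1)


def min_ensemble_size(c, b, a, target, max_n=1000):
    """Smallest n with c + b * n**(-a) <= target, under an assumed power law.

    c, b, a are hypothetical fitted constants, not values from the paper.
    Returns None if the target is not reached for any n <= max_n.
    """
    for n in range(1, max_n + 1):
        if c + b * n ** (-a) <= target:
            return n
    return None
```

For example, with three members that all agree on the first sample but disagree on the second, `predict_or_abstain(ensemble_predict(member_probs), 0.9)` returns class 0 for the first sample and abstains on the second. With hypothetical constants `c=0.5`, `b=0.4`, `a=1.0` and a target loss of 0.61, `min_ensemble_size` returns 4.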

Dmitry Vetrov

Laboratory Head

Maxim Kodryan

Research Assistant

Ekaterina Lobacheva

Research Fellow

Nadezhda Chirkova

Research Fellow