Faculty members will present their research at the ICLR and AISTATS conferences

The 22nd International Conference on Artificial Intelligence and Statistics (AISTATS) will be held in Naha, Okinawa, Japan, from April 16 to April 18, 2019. The conference is rated A in the CORE ranking.

This year, Dmitry Molchanov, Valery Kharitonov, Artem Sobolev and Dmitry Vetrov, researchers of the Bayesian research group, will present their paper "Doubly Semi-Implicit Variational Inference" in the main track of the conference. In it, they propose an extension of the variational inference framework that allows the use of semi-implicit prior distributions.

From May 6 to May 9, 2019, the 7th International Conference on Learning Representations (ICLR) will be held in New Orleans, USA. ICLR is one of the fastest-growing artificial intelligence conferences in the world: this year, 1591 papers were submitted, of which 524 were accepted.

This year, faculty members will present three papers in the main track of ICLR:

-

Variance Networks: When Expectation Does Not Meet Your Expectations (authors: Kirill Neklyudov, Dmitry Molchanov, Arsenii Ashukha, Dmitry Vetrov)

-

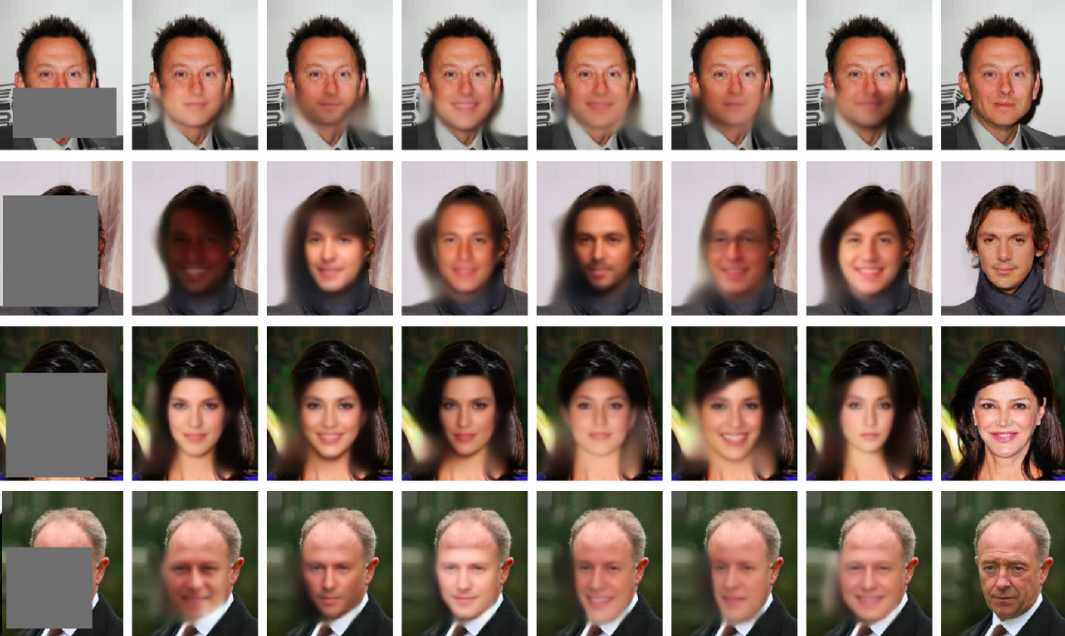

Variational Autoencoder with Arbitrary Conditioning (authors: Oleg Ivanov, Michael Figurnov, Dmitry Vetrov)

-

The Deep Weight Prior (authors: Andrei Atanov, Arsenii Ashukha, Kirill Struminsky, Dmitriy Vetrov, Max Welling)

Kirill Neklyudov

PhD student, research fellow at Samsung-HSE Laboratory

“In ‘Variance Networks: When Expectation Does Not Meet Your Expectations’ we found that Bayesian neural networks with a zero-mean Gaussian variational approximation, in which only the variances are trained, learn surprisingly well. This has a very counter-intuitive implication: neural networks can not only withstand an extreme amount of noise during training, but can actually store information using only the variances of this noise.”

Dmitry Vetrov

Research Professor

“Two papers, ‘The Deep Weight Prior’ and ‘Doubly Semi-Implicit Variational Inference’, focus on semi-implicit generative models, in which a distribution is modeled implicitly, without access to its density function. This approach makes it possible to model complicated distributions in high-dimensional spaces, such as the space of neural network weights. We are currently continuing our research in this direction.”
