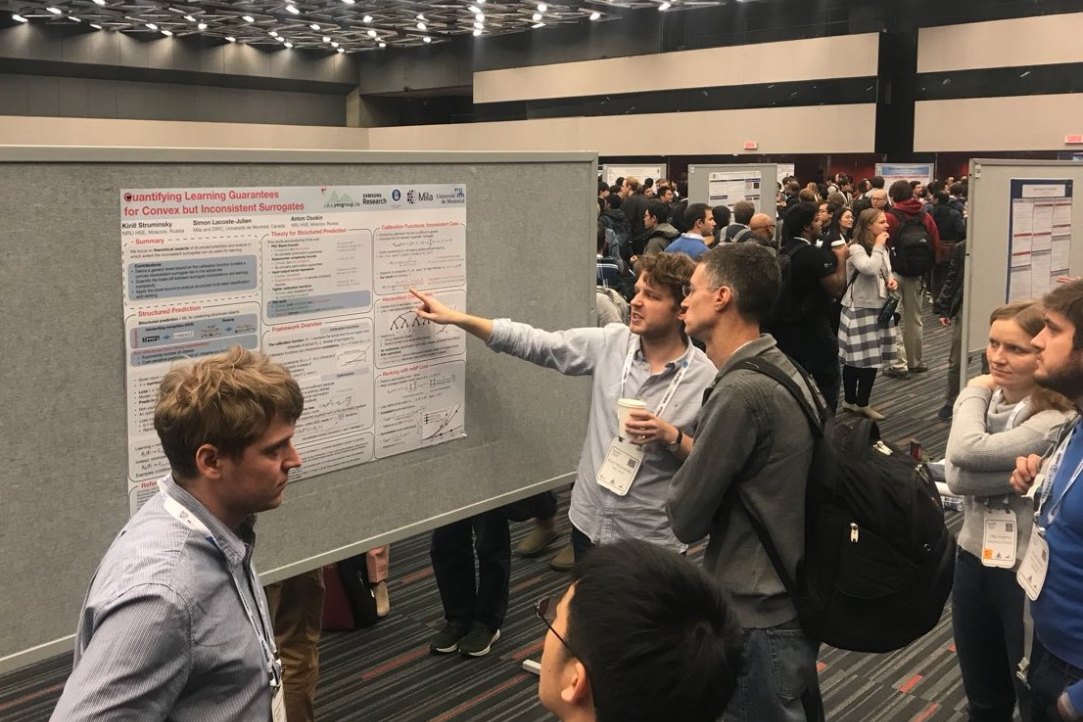

The faculty presented its research results at NeurIPS, the largest international machine learning conference

From December 2 to December 8, 2018, the 32nd Conference on Neural Information Processing Systems (NeurIPS 2018) was held in Montreal. Every year, NeurIPS gathers thousands of machine learning researchers who present their results in deep learning, reinforcement learning, scalable optimization, Bayesian methods, and other subfields of machine learning. The number of papers submitted to the conference grows every year; this year it reached 4854, of which 1019 (about 21%) were accepted.

This year, three papers from the Faculty of Computer Science were presented in the main track of the conference: “Loss Surfaces, Mode Connectivity, and Fast Ensembling of DNNs” (spotlight by Timur Garipov, Pavel Izmailov, Dmitry Podoprikhin, Dmitry Vetrov and Andrew Gordon Wilson) and “Quantifying Learning Guarantees for Convex but Inconsistent Surrogates” (poster by Kirill Struminsky, Simon Lacoste-Julien and Anton Osokin), both from the Bayesian research group, and “Non-metric Similarity Graphs for Maximum Inner Product Search” (poster by Stanislav Morozov and Artem Babenko) from the Joint Department with Yandex.

Dmitry Vetrov, a research professor at the Faculty of Computer Science, was invited to give a talk at the workshop on Bayesian Deep Learning. He spoke about semi-implicit probabilistic models: models that combine the flexibility of implicit probabilistic models (such as GANs) with the simplicity of explicit training (for example, normalizing flows).

In addition, several papers from the Bayesian research group (employees of the Centre for Deep Learning and Bayesian Methods, the Samsung-HSE Laboratory, and the Center for Artificial Intelligence of Samsung in Moscow) were presented at workshops:

- Importance Weighted Hierarchical Variational Inference (by Artem Sobolev and Dmitry Vetrov)

- Variational Dropout via Empirical Bayes (by Valery Kharitonov, Dmitry Molchanov and Dmitry Vetrov)

- Subset-Conditioned Generation Using Variational Autoencoder With A Learnable Tensor-Train Induced Prior (by Maxim Kuznetsov, Daniil Polykovskiy and Dmitry Vetrov)

- Joint Belief Tracking and Reward Optimization through Approximate Inference (by Pavel Shvechikov, Alexander Grishin, Arseny Kuznetsov, Alexander Fritsler and Dmitry Vetrov)

- Bayesian Sparsification of Gated Recurrent Neural Networks (by Ekaterina Lobacheva, Nadezhda Chirkova and Dmitry Vetrov)

Dmitry Vetrov

Research Professor

“In ‘Loss Surfaces, Mode Connectivity, and Fast Ensembling of DNNs’ we empirically investigated an important property of neural networks (NNs): the independent optima of NN-based loss functions turn out to be connected by simple continuous curves along which training loss and test accuracy are nearly constant. This opens up possibilities for more efficient ensembling of neural networks. At the moment, we are continuing research in this direction.”

Junior Research Fellow
