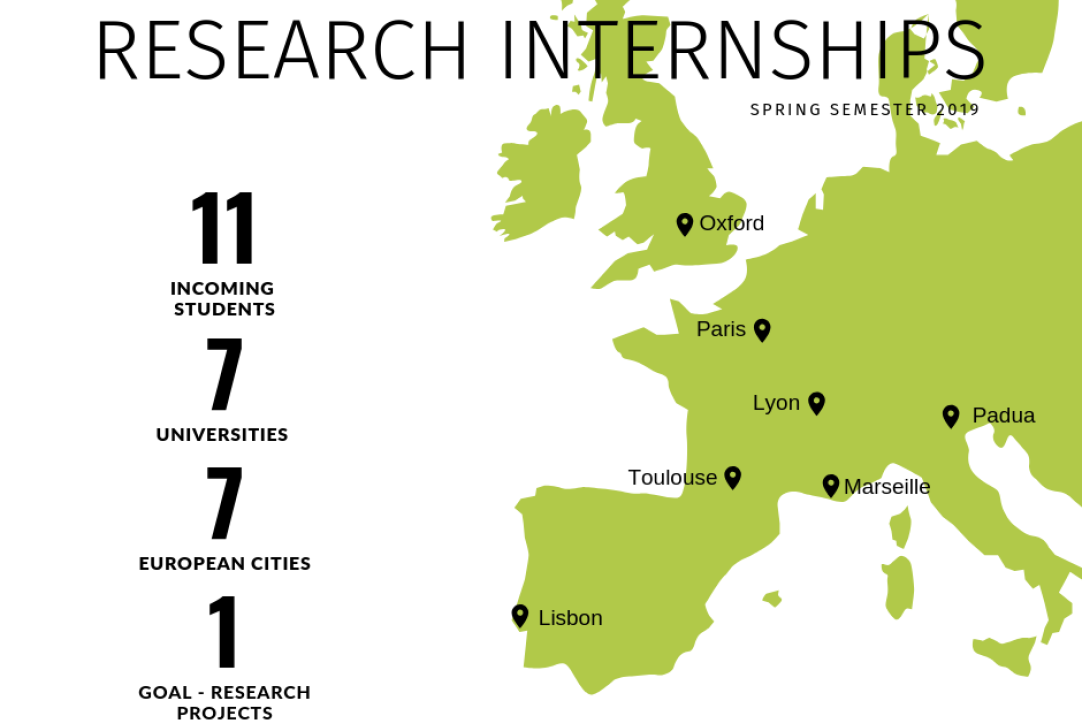

Faculty of Computer Science Hosted 11 International Students for Research Internships This Spring

They came from universities including École normale supérieure, the University of Oxford, the University of Padua, the University of Toulouse, École Centrale de Marseille, INSA de Lyon, and Instituto Superior Técnico in Lisbon.

One of them was Diego Granziol, a PhD student at the University of Oxford, who spent over two months working on a project at the Centre of Deep Learning and Bayesian Methods. He told us about his experience and his project at the HSE Faculty of Computer Science:

During my stay I worked in the Bayesian Methods Research Group, headed by Prof. Dmitry Vetrov, and my main work was with Timur Garipov, a member of the group. We began by integrating his code base with my previous submission (spectral stochastic gradient descent) and generating some theoretical results for his previous submission (stochastic weight averaged Gaussians, or SWAG).

Interestingly, although perhaps somewhat disappointingly, we noted that whilst the curvature learned at the start of training was very close to that of the hand-picked schedules, the associated decrease in learning rate would blow up the eigenspectrum, which would decrease the learning rate further, grinding learning to a halt. Furthermore, one could prove for the convex quadratic that the PCA used by SWAG would not give the same eigenbasis as that of the underlying convex quadratic.

Our next endeavour focused on understanding generalisation. We looked at the work of Ledoit and Wolf on large-dimensional covariance matrices from multivariate statistics and implemented the shrinkage coefficient for deep neural networks in an attempt to learn the L2 weight decay. We noted that the weight decay coefficient learned from the spectrum using our implementation matched the hand-picked, grid-searched decay reasonably well and that it increased during training, during which trust in the empirical Hessian would decrease due to over-fitting. Results looked promising until the end of training: when the learning rate was decreased, the spectral width and spectral variance increased to a level at which the confidence in the empirical Hessian was zero, halting learning and giving poor performance. This has since been rectified by choosing learning rate schedules in which the learning rate is not reduced so aggressively.
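For readers unfamiliar with shrinkage, the classical Ledoit and Wolf estimator blends a sample covariance matrix with a scaled identity, and the blending weight is the shrinkage coefficient. The NumPy sketch below illustrates only that textbook covariance estimator; it is not the Hessian-based implementation described in the interview. The function name `ledoit_wolf_shrinkage` and the random demo data are assumptions made for illustration.

```python
import numpy as np


def ledoit_wolf_shrinkage(X):
    """Return (shrunk covariance, shrinkage coefficient) for samples X.

    X has shape (n_samples, n_features). Illustrative sketch of the
    classical linear shrinkage towards a scaled identity target.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    Xc = X - X.mean(axis=0)              # centre each feature
    S = Xc.T @ Xc / n                    # sample covariance (1/n convention)
    mu = np.trace(S) / p                 # mean eigenvalue of S
    target = mu * np.eye(p)              # shrinkage target: scaled identity

    # Squared Frobenius distance between S and the target.
    d2 = np.sum((S - target) ** 2)

    # Estimated sampling error of S:
    # (1/n^2) * sum_k ||x_k x_k^T - S||_F^2, expanded in closed form.
    row_norms_sq = np.sum(Xc ** 2, axis=1)
    b2_bar = (np.sum(row_norms_sq ** 2) - n * np.sum(S ** 2)) / n ** 2
    b2 = min(max(b2_bar, 0.0), d2)       # clip so the coefficient is in [0, 1]

    delta = 0.0 if d2 == 0.0 else b2 / d2
    shrunk = (1.0 - delta) * S + delta * target
    return shrunk, delta


# Hypothetical demo data: few samples relative to the dimension,
# which is the regime where shrinkage matters most.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(30, 20))
shrunk_demo, delta_demo = ledoit_wolf_shrinkage(X_demo)
print("shrinkage coefficient:", delta_demo)
```

A coefficient near 0 means the sample estimate is trusted almost fully, while a coefficient near 1 means it is replaced almost entirely by the identity target. The identity term plays a role loosely analogous to an L2 penalty, which is the connection to weight decay drawn above.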

The last piece of work, which has been submitted to NeurIPS and to the ICML workshop “Theoretical Physics in Deep Learning”, where it was accepted and presented last week, focused on understanding generalisation from the perspective of the deviations of the true risk surface from the empirical risk surface. This was a particularly tough week, as my research partner had his examinations and constant visa appointments, so most of the paper writing and ideas, and the entirety of the maths, which was based on low-rank perturbations of the Wigner ensemble, was a one-man job. An application of this deviation was presented as a reason for the inability of curvature-based optimisers to generalise effectively. Extensive experiments were given, using data augmentation on spectra, batch spectra, and Newton methods on logistic regression as a toy example.

I cannot stress enough how lucky and privileged I was to be given the opportunity to come to Russia and work with the Bayes group. The atmosphere was warm and welcoming. Timur is a truly amazing coder, and without him I wouldn’t have been able to make any progress on my own ideas or learn about other very relevant ones. I think he has the potential to do some really exceptional work, and I am excited to follow his progress. Dmitry’s final input to the paper we sent to NeurIPS was absolutely vital, and I thank him for his regular meetings, input, questions, support and time.

I hope to have the opportunity to return and work with the Bayesian Methods Research Group again.

Learn more about internship opportunities at the HSE Faculty of Computer Science